Context

Cognitive Ergonomics coursework

Duration

Two months

My Role

Research design, engineering of the driving task, and experimenter during the trials

Team

Five students

Brief

Driver distraction kills, and technological devices can turn into a dangerous source of distraction.

When designing any system intended for use while driving, usability and attentional demands must be carefully considered.

In this study we placed participants in a simulated driving setting and performed experiments on two infotainment systems to measure and compare their usability and their impact on visual attention.

Methods included gaze detection algorithms, usability questionnaires, performance measures, and statistical analysis.


The attentional demands and usability of in-vehicle systems must be carefully considered.

Infotainment

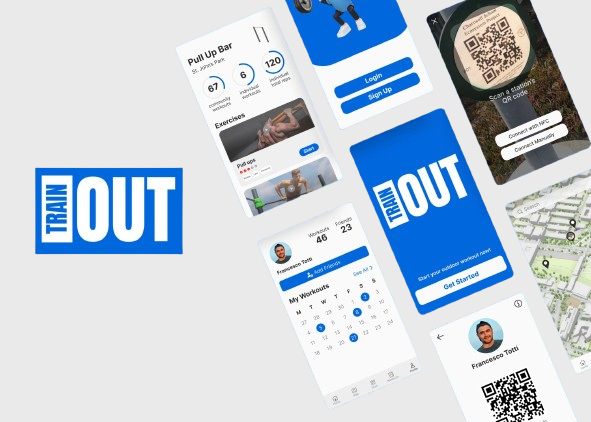

In-Vehicle Infotainment Systems (IVIS) allow drivers to perform a variety of tasks (entertainment, navigation, communication and more) while driving, typically by interacting through touch or voice with a screen placed at the top of the vehicle's center console.

Today, Google's "Android Auto" and Apple's "CarPlay" dominate a large share of the IVIS market.

Both systems connect a smartphone to the vehicle's IVIS, integrating the phone's functions into an interface designed to be used while driving.

Android Auto and Apple CarPlay are two of the most popular In-Vehicle Infotainment Systems (IVIS).

Android Auto (left) and Apple CarPlay (right)

Goals

The goals of our research are twofold.

First, we aim to measure and compare the usability of Android Auto and CarPlay through a series of usability tests in a realistic setting.

Second, we aim to measure and compare the impact these systems have on visual attention by analyzing participants' gaze patterns and measuring their performance in a simulated driving task.

For more details on goals and hypotheses, see the Full Report.

Our goal was to measure and compare their usability and their impact on visual attention at the wheel.

Realistic Lab Setting

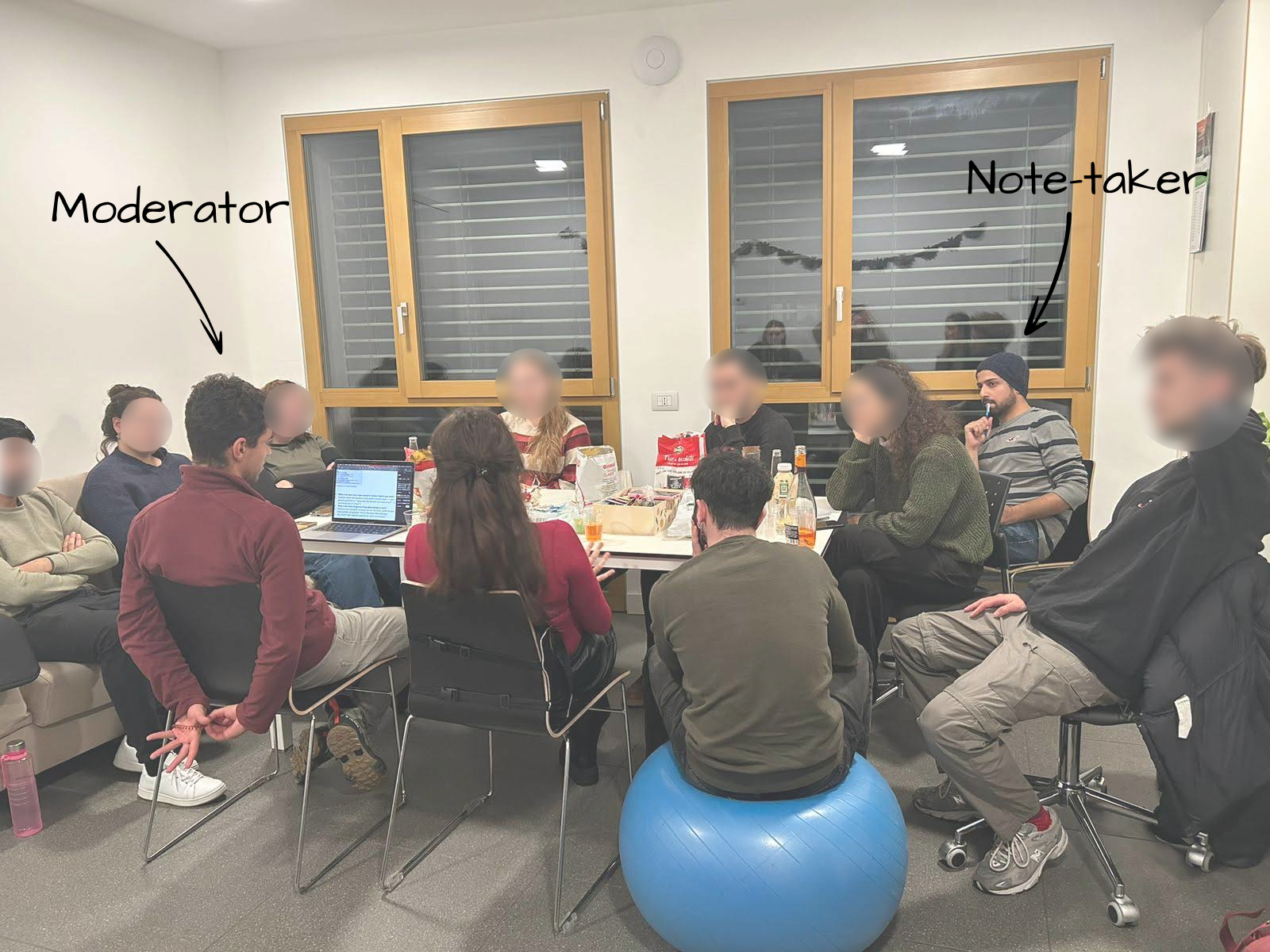

To study these systems effectively, the standard usability testing setup, in which the user sits in front of a screen (pictured), would not suffice, since it would not reflect the patterns of interaction likely to occur during actual use.

A standard usability test setup wasn't going to work for this study.

The standard usability testing setup won't work in this situation. (Image: NN Group)

To obtain realistic results, we needed to recreate the attentional demands, ergonomic constraints, and patterns of interaction that users experience while driving.

For example, while driving, users cannot look at the interface continuously: they must keep their attention on the road, choose appropriate moments to glance at the screen, and interact with it using only one hand, sometimes without looking at it at all.

We needed to conduct the test in a setting that would replicate the attentional demands, ergonomic constraints and patterns of interaction seen while driving.

Interacting with an IVIS in the "real world"

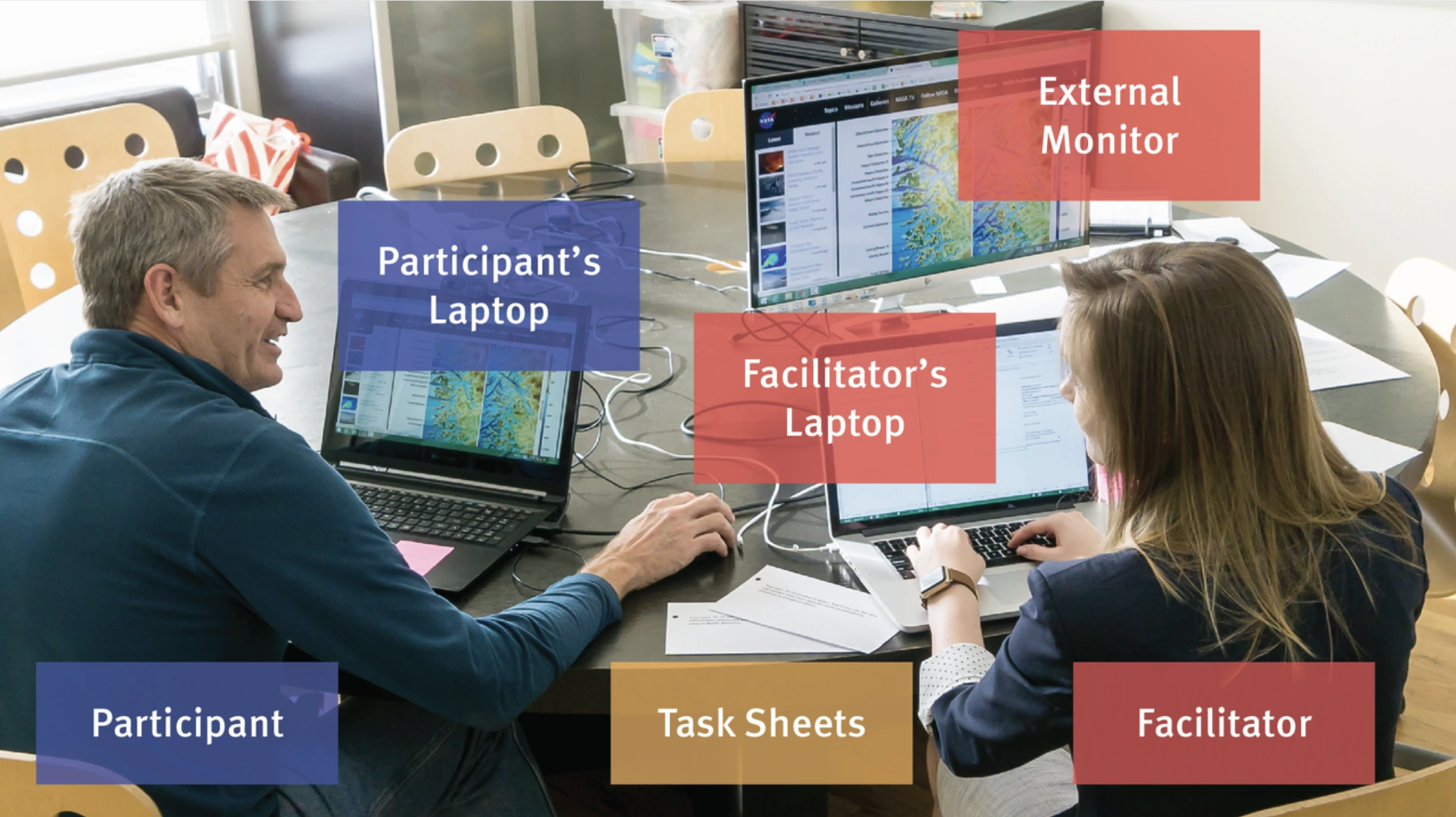

To achieve a realistic setting we conducted the experiment inside a stationary vehicle (2018 Ford Fiesta), equipped with a standard 8-inch IVIS screen on the center console. This meant the participants were subject to realistic constraints in terms of screen size, position, distance and orientation.

Laboratory Setup

We placed participants in a car and gave them two simultaneous tasks: a task on the IVIS and a simulated "driving task".


Gaze Detection

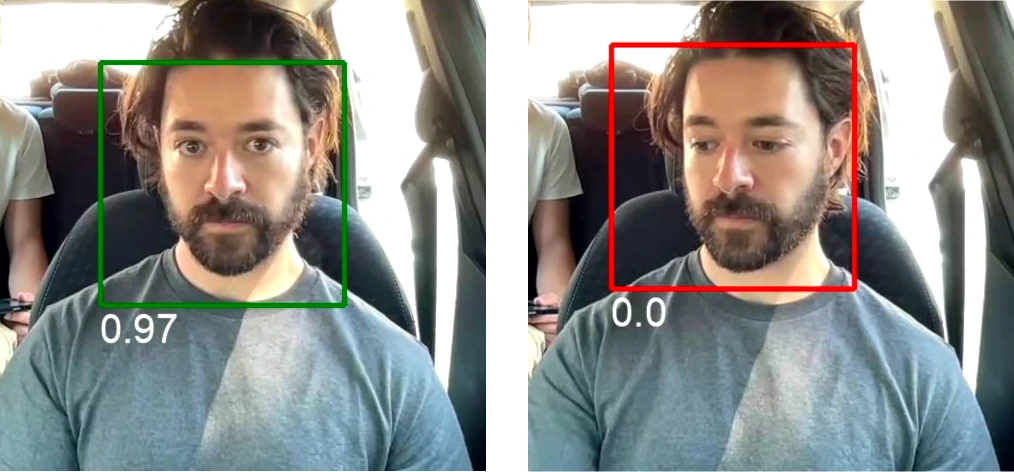

Video of the participants performing the task was recorded through a webcam placed in front of them, right on top of the screen displaying the driving task. Thanks to a pre-trained gaze detection model originally meant to detect eye contact (Chong et al. 2020), we were able to measure with good accuracy when participants were looking “at the road” (driving task) and when they weren’t. This gave us a direct measure of overt visual attention.
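As a minimal sketch of how per-frame gaze labels can be turned into the overt-attention measures reported later (percentage of time not looking at the road, plus the individual off-road glances), the code below assumes the model's output has already been reduced to one boolean per video frame (True = looking at the road) at a fixed frame rate. The function name, the frame rate, and this input format are hypothetical and not the exact pipeline used in the study.

```python
from dataclasses import dataclass


@dataclass
class GazeSummary:
    percent_distracted: float  # % of frames in which the participant was NOT looking at the road
    glance_durations_s: list[float]  # duration of each off-road glance, in seconds


def summarize_gaze(on_road: list[bool], fps: float = 30.0) -> GazeSummary:
    """Summarize per-frame gaze labels (hypothetical format: True = looking at the road)."""
    if not on_road:
        raise ValueError("no frames to summarize")
    glances: list[float] = []
    run = 0  # length of the current off-road run, in frames
    for looking_at_road in on_road:
        if looking_at_road:
            if run:  # an off-road glance just ended
                glances.append(run / fps)
                run = 0
        else:
            run += 1
    if run:  # the trial ended while the participant was looking away
        glances.append(run / fps)
    off_road_frames = sum(1 for f in on_road if not f)
    return GazeSummary(100.0 * off_road_frames / len(on_road), glances)


# Hypothetical example: 10 frames at 10 fps containing two off-road glances
frames = [True, True, False, False, False, True, True, False, False, True]
print(summarize_gaze(frames, fps=10.0))
```

Keeping the glance durations alongside the overall percentage makes it possible to distinguish many short glances from a few long ones, which a single percentage would hide.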

We analyzed the gaze of participants during the test to measure overt visual attention.

Gaze-detection algorithm at work.

(Published with participant's consent)

Driving Task

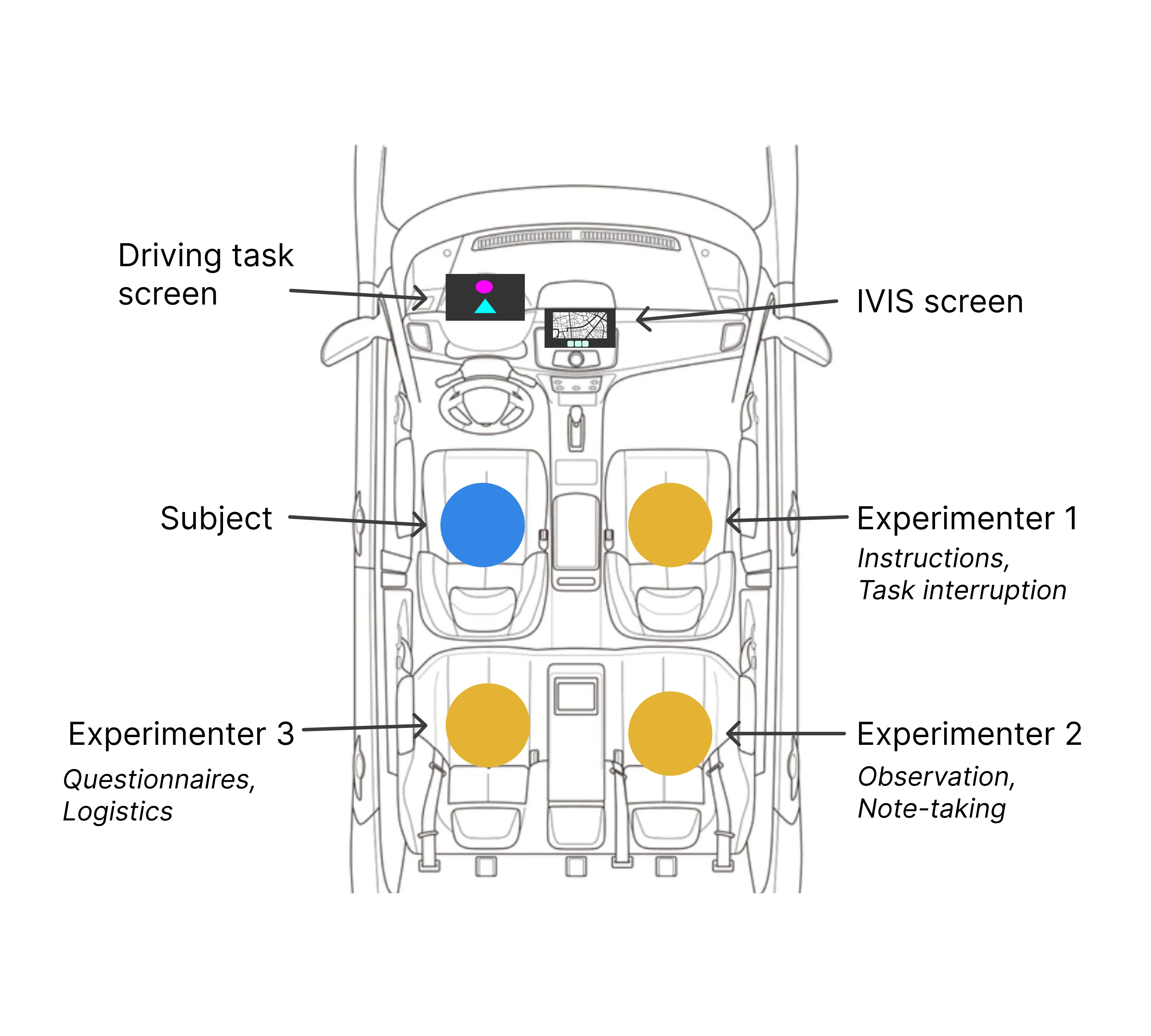

We devised a "driving task": a task that would elicit attentional demands similar to those of driving and produce a more realistic pattern of interaction with the IVIS.

During the experiment participants had to perform the driving task and simultaneously perform a task on the IVIS.

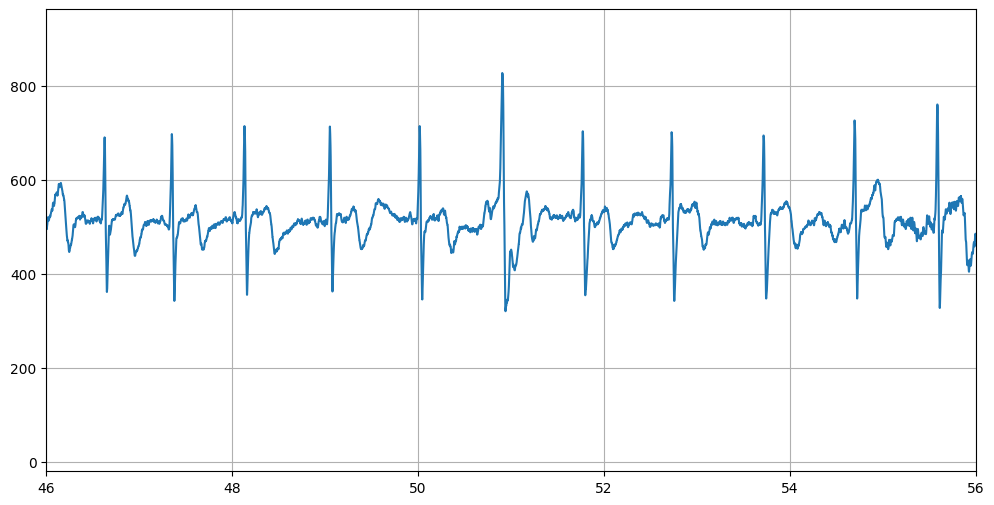

The driving task we chose is a pursuit tracking task, adapted from similar research on driver distraction (Castro et al. 2019): a circle and a triangle are displayed on a screen placed above the steering wheel.

The circle moves from side to side in a seemingly random pattern, while the participant moves the triangle from side to side, trying to keep it as close as possible to the circle. The participant controls the triangle with a wireless one-handed joystick (Joy-Con) held in the left hand, since the right hand is used to interact with the IVIS.
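The text only says that the circle's motion appears random. One common way to produce such motion in pursuit tracking tasks is a sum of sinusoids with unrelated frequencies; the sketch below uses that approach purely as an assumption, with hypothetical frequencies, amplitudes, phases, a normalized horizontal range of [-1, 1], and a joystick that sets the triangle's velocity through a hypothetical gain.

```python
import math

# Hypothetical parameters: frequencies (Hz) chosen so the combined
# motion does not visibly repeat over a trial.
FREQS_HZ = (0.07, 0.13, 0.23)
AMPLITUDES = (0.5, 0.3, 0.2)  # sum to 1.0, so the circle stays within [-1, 1]
PHASES = (0.0, 1.3, 2.1)


def target_position(t: float) -> float:
    """Horizontal position of the circle at time t (seconds), in [-1, 1]."""
    return sum(
        a * math.sin(2 * math.pi * f * t + p)
        for a, f, p in zip(AMPLITUDES, FREQS_HZ, PHASES)
    )


def step_triangle(x: float, stick_x: float, dt: float, gain: float = 1.5) -> float:
    """Advance the triangle by one frame.

    stick_x is the joystick's horizontal axis in [-1, 1] and sets the
    triangle's velocity; the new position is clamped to [-1, 1].
    """
    return max(-1.0, min(1.0, x + gain * stick_x * dt))


dt = 1 / 60  # hypothetical 60 fps display refresh
circle = [target_position(i * dt) for i in range(600)]  # 10 s of target motion
```

Controlling velocity rather than position means the triangle drifts off target whenever the participant's attention (and corrective input) lapses, which is what makes the task sensitive to glances away from the road.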

Compared with classic reaction-time (RT) tasks, this task has the advantage of giving a continuous measure of performance, calculated as the mean absolute error (the distance between circle and triangle). Because tracking performance depends closely on the visual attention the participant pays to the driving screen, it provides a reliable indirect measure of visual attention over the whole trial. In an RT or go/no-go task, by contrast, the measure is discrete: we only know whether the participant is paying attention at the moment the target is presented.
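The performance measure described above, the mean absolute error between circle and triangle, can be computed directly from the logged positions. A minimal sketch, assuming both positions are recorded once per frame on the same horizontal scale (the logging format is an assumption). Lower values mean better tracking.

```python
def mean_absolute_error(circle_x: list[float], triangle_x: list[float]) -> float:
    """Mean absolute horizontal distance between the circle (target) and the triangle (cursor)."""
    if len(circle_x) != len(triangle_x) or not circle_x:
        raise ValueError("need two equally long, non-empty position logs")
    return sum(abs(c - t) for c, t in zip(circle_x, triangle_x)) / len(circle_x)


# Hypothetical four-frame log
print(mean_absolute_error([0.0, 0.5, 1.0, 0.5], [0.0, 0.25, 0.5, 0.5]))  # 0.1875
```

Because every frame contributes, a glance away from the road shows up as a local rise in error for as long as it lasts, rather than as a single hit or miss.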

We adapted a driving task from the existing literature. Its role was to elicit similar attentional demands as driving, in order to produce a realistic interaction pattern with the IVIS.

The driving task

We also measured driving task performance as an indirect measure of attention.


IVIS Tasks

While performing the driving task, participants also performed tasks on the IVIS, such as setting a destination on the navigation system, playing music, and making phone calls. These tasks were chosen to represent common interactions that drivers have with infotainment systems.

Experiment Design

In this project we followed a within-subjects design: every participant performed all three tasks on both platforms, in a randomized order.

Before beginning, participants filled in an introductory questionnaire about demographics and their familiarity with the platforms used in the experiment.

This was followed by a familiarization trial, which acquainted participants with the driving task and reduced practice-related carryover effects.

The familiarization trial was followed by a control trial, again with the driving task only, to set a performance baseline for each participant.

Then the experimental trials began, in which participants performed the driving task and an IVIS task simultaneously. Each participant performed six such trials: one for each IVIS task (Music, Calling and Navigation) on each platform (Android Auto and CarPlay).

Since a within-subjects design is susceptible to carryover effects, we took steps to minimize them across tasks and platforms, such as randomizing the trial order.
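A minimal sketch of generating one participant's randomized order of the six experimental trials (3 tasks × 2 platforms). Because the SUS was filled in after all tasks on a platform, the sketch assumes trials were blocked by platform, with the platform order and the task order within each block shuffled; this blocking scheme and the use of a participant ID as the random seed are assumptions, not the study's documented procedure.

```python
import random

TASKS = ("Music", "Calling", "Navigation")
PLATFORMS = ("Android Auto", "CarPlay")


def trial_order(participant_id: int) -> list[tuple[str, str]]:
    """Return the six (platform, task) trials for one participant.

    Assumption: trials are blocked by platform (the SUS follows each block);
    platform order and task order within each block are shuffled.
    """
    rng = random.Random(participant_id)  # seeded, so each schedule is reproducible
    platforms = list(PLATFORMS)
    rng.shuffle(platforms)
    order: list[tuple[str, str]] = []
    for platform in platforms:
        tasks = list(TASKS)
        rng.shuffle(tasks)
        order.extend((platform, task) for task in tasks)
    return order


print(trial_order(participant_id=7))  # hypothetical participant ID
```

Seeding per participant lets the experimenter regenerate any participant's schedule later, for example when matching recordings to trials.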

After each IVIS task, participants filled in a Single Ease Question (SEQ) questionnaire; after completing all the tasks on a platform, they also filled in a System Usability Scale (SUS) questionnaire rating the platform's overall usability.
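The SEQ is a single 7-point rating and needs no further scoring, while the SUS combines ten 5-point items into a 0 to 100 score using the standard rule: odd-numbered (positively worded) items contribute rating − 1, even-numbered (negatively worded) items contribute 5 − rating, and the sum is multiplied by 2.5. A minimal sketch; the example responses are hypothetical.

```python
def sus_score(responses: list[int]) -> float:
    """Score one System Usability Scale questionnaire on a 0-100 scale.

    responses: the ten item ratings in questionnaire order, each from 1 to 5.
    """
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += rating - 1 if item_number % 2 == 1 else 5 - rating
    return total * 2.5


print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))  # 85.0
```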

Standardized usability questionnaires were also used to gain subjective measures of usability from the participants.

Vehicle setup schematic

Testing the setup

Data Analysis & Results

Note: for more detailed information check the Full Report.

We conducted both descriptive and inferential statistical analyses.

Our main inferential tool was a two-way repeated-measures ANOVA, which found no significant main effect of platform on either time on task or driving task performance (𝑝 > 0.05).

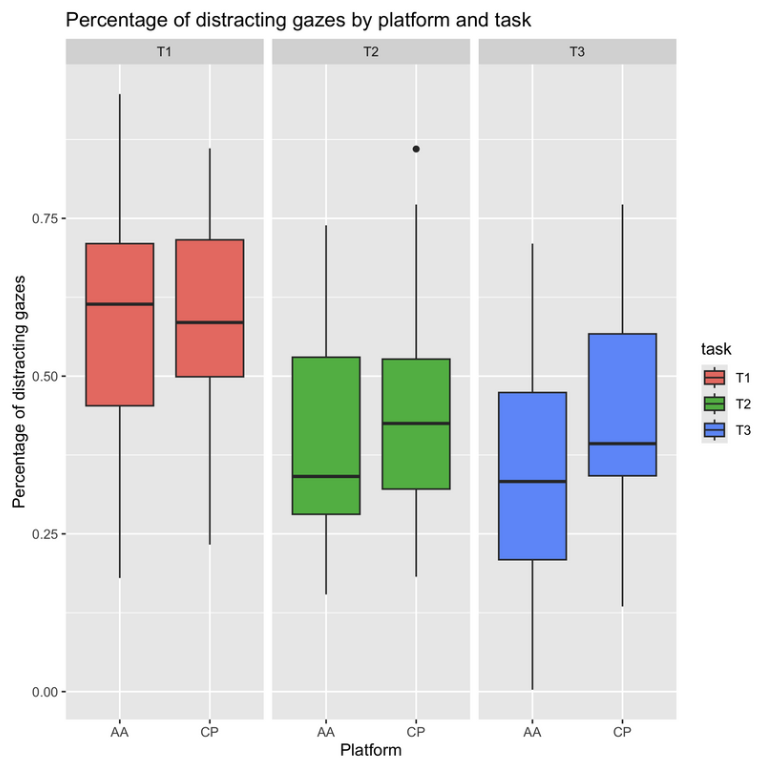

However, the ANOVA for visual attention (distracted gaze) revealed significant main effects of both platform and task type (𝑝 < 0.05).
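To make the two-way repeated-measures ANOVA concrete, the sketch below computes its F statistics (platform, task, and their interaction) from the standard sums-of-squares decomposition, using only the standard library. It assumes a complete, balanced design with one value per participant per cell and applies no sphericity correction; the data layout and example values are hypothetical. p-values would then come from the F distribution with the returned degrees of freedom (for example `scipy.stats.f.sf`), and in practice a library routine such as statsmodels' `AnovaRM` performs the whole analysis.

```python
from statistics import fmean


def rm_anova_2way(x: list[list[list[float]]]) -> dict[str, tuple[float, int, int]]:
    """Two-way repeated-measures ANOVA.

    x[i][j][k] is the value for platform i, task j, subject k.
    Returns {effect: (F, df_effect, df_error)}.
    """
    a, b, n = len(x), len(x[0]), len(x[0][0])
    cells = [(i, j, k) for i in range(a) for j in range(b) for k in range(n)]
    grand = fmean(x[i][j][k] for i, j, k in cells)
    m_a = [fmean(x[i][j][k] for j in range(b) for k in range(n)) for i in range(a)]
    m_b = [fmean(x[i][j][k] for i in range(a) for k in range(n)) for j in range(b)]
    m_s = [fmean(x[i][j][k] for i in range(a) for j in range(b)) for k in range(n)]
    m_ab = [[fmean(x[i][j]) for j in range(b)] for i in range(a)]
    m_as = [[fmean(x[i][j][k] for j in range(b)) for k in range(n)] for i in range(a)]
    m_bs = [[fmean(x[i][j][k] for i in range(a)) for k in range(n)] for j in range(b)]

    ss_total = sum((x[i][j][k] - grand) ** 2 for i, j, k in cells)
    ss_a = b * n * sum((m - grand) ** 2 for m in m_a)
    ss_b = a * n * sum((m - grand) ** 2 for m in m_b)
    ss_s = a * b * sum((m - grand) ** 2 for m in m_s)
    ss_ab = n * sum((m_ab[i][j] - m_a[i] - m_b[j] + grand) ** 2
                    for i in range(a) for j in range(b))
    ss_as = b * sum((m_as[i][k] - m_a[i] - m_s[k] + grand) ** 2
                    for i in range(a) for k in range(n))
    ss_bs = a * sum((m_bs[j][k] - m_b[j] - m_s[k] + grand) ** 2
                    for j in range(b) for k in range(n))
    ss_abs = ss_total - ss_a - ss_b - ss_s - ss_ab - ss_as - ss_bs  # residual

    def f_ratio(ss_eff: float, df_eff: int, ss_err: float, df_err: int):
        return (ss_eff / df_eff) / (ss_err / df_err), df_eff, df_err

    # Each effect is tested against its own interaction with subjects.
    return {
        "platform": f_ratio(ss_a, a - 1, ss_as, (a - 1) * (n - 1)),
        "task": f_ratio(ss_b, b - 1, ss_bs, (b - 1) * (n - 1)),
        "platform x task": f_ratio(ss_ab, (a - 1) * (b - 1),
                                   ss_abs, (a - 1) * (b - 1) * (n - 1)),
    }


# Hypothetical % distracted values: 2 platforms x 3 tasks x 4 participants
data = [
    [[10, 12, 11, 14], [20, 19, 23, 22], [15, 17, 14, 16]],
    [[11, 15, 12, 14], [22, 20, 23, 25], [18, 17, 16, 19]],
]
print(rm_anova_2way(data))
```

Testing each effect against its interaction with subjects, rather than a single pooled error term, is what removes stable between-participant differences from the comparison.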

Post-hoc analyses of the task pairs, performed with three two-sample t-tests with Bonferroni correction, indicated significant differences in time on task, driving task performance, and percentage of distraction for Task 3 (Phone Call).
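The Bonferroni correction controls the family-wise error rate across the three comparisons by multiplying each p-value by the number of tests (equivalently, testing each at α/3). A minimal sketch of that adjustment step only; the p-values below are made up for illustration and are not the study's results.

```python
def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[tuple[float, bool]]:
    """Return (adjusted p-value, significant?) for each test in the family."""
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]  # cap at 1, since p cannot exceed 1
    return [(p_adj, p_adj < alpha) for p_adj in adjusted]


# Hypothetical p-values for three comparisons (not the study's data)
print(bonferroni([0.20, 0.03, 0.004]))
```

Note how an unadjusted p of 0.03 would pass the usual 0.05 threshold but no longer does once three tests are considered together.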

Taken together, these analyses indicate that the platform had no significant main effect on time on task or driving task performance, although the post-hoc tests did reveal differences for the phone call task.

We found no significant differences in either SUS or SEQ scores, which suggests that participants perceived the two platforms as similarly usable.

In addition to quantitative measures, we collected qualitative data through interviews and observations of each participant to support and contextualize the findings (see the Full Report).

% of time looking at the IVIS (distracted) by platform and task

Note that this project is not published research. Although it was carried out following rigorous and precise scientific methods, it does have some limitations; for more details, refer to the Full Report below.

Full Report

References:

1. Castro, S. C., Strayer, D. L., Matzke, D., & Heathcote, A. (2019). Cognitive workload measurement and modeling under divided attention.

2. Chong, E., Clark-Whitney, E., Southerland, A., et al. (2020). Detection of eye contact with deep neural networks is as accurate as human experts.

(Complete reference list in the Full Report)